
How banks can improve customer care now with generative AI

Aside from interest rates, generative AI (gen AI) may be the most frequently used phrase on banks' earnings calls today. And for good reason. It'll boost banks' bottom lines and help them deliver better products and services to customers, outrun competitors, and improve productivity.

Many banks are already using gen AI. Morgan Stanley uses OpenAI's GPT-4 to organize its wealth management knowledge base. And both Goldman Sachs and Westpac use it to help their developers write code. As the technology evolves, thousands of use cases are emerging. So, where should financial institutions start?

Evaluating the use cases

Here's what banks need to know about where they can use generative AI now, what they need to have in place before beginning, and how to mitigate risk.

Assist customer care agents

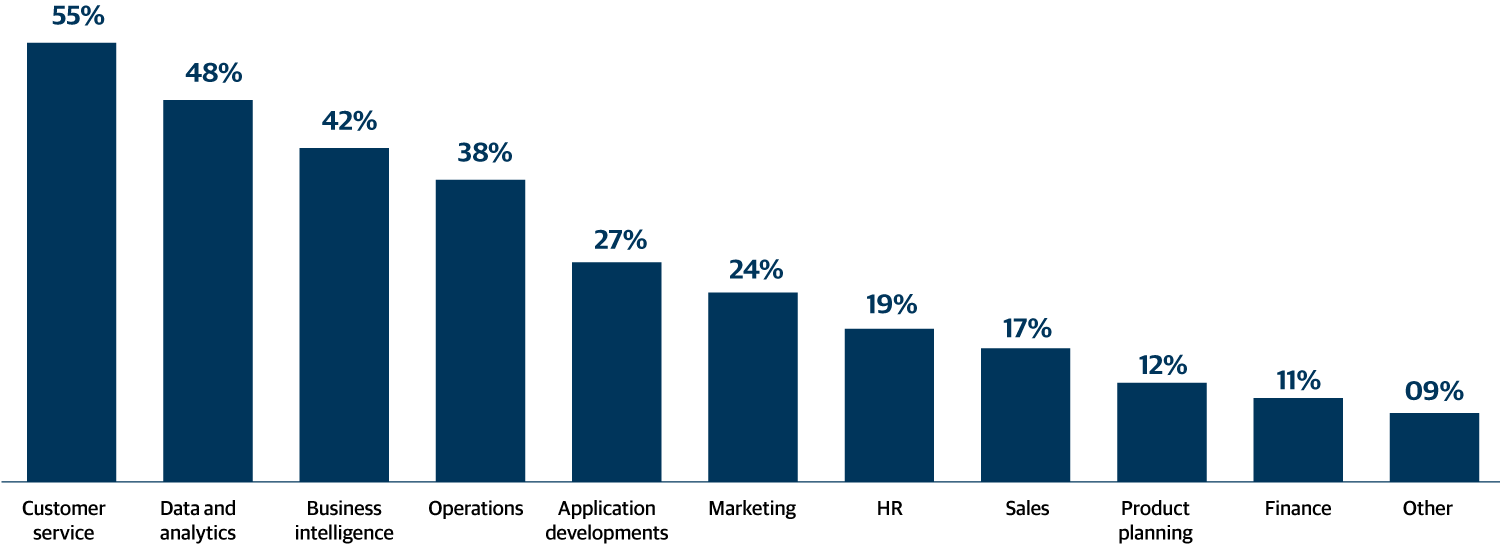

Recent proprietary research from Gartner prioritizes areas where ChatGPT and similar products will add the most value for banks (fig 1). Customer care — but crucially not unsupervised customer-facing activity — leads the way.

Fig 1: Banks are prioritizing customer care use cases for gen AI

Source: Gartner Financial Services Business Priority Tracker

Banks can use generative AI, with human oversight, to immediately and dramatically improve the service their agents provide to customers. For example, it can rapidly pull customer data from internal and external sources and prepare personalized insights that help agents cross-sell products. The technology synthesizes the data into a crib sheet that updates dynamically during conversations or message exchanges.

Gen AI can also drive product and service innovation by scouring large volumes of unstructured data, such as contact center calls, to reveal what kinds of problems customers are having, what products they want, and more.

It can draft rapid, empathetic, context-rich, hyper-local responses in a customer's vernacular when queried, for example, when denying a customer a product or service but encouraging loyalty with an alternative.

And gen AI can provide agents real-time guidance through natural language chat on how to quickly perform complex transactions and resolve even the trickiest of problems — as it does at Commonwealth Bank, where gen AI interrogates 4,500 documents on the bank's policies in real time.
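To make the idea of interrogating policy documents concrete, here is a minimal sketch of the retrieval step that typically sits in front of a gen AI model: finding the policy passages most relevant to an agent's question. It is an illustration under stated assumptions, not a description of any bank's system. The document IDs, the policy text, and the `rank_documents` function are all hypothetical, and the scoring is a simple TF-IDF-style term overlap rather than the embedding search a production system would more likely use.

```python
import math
import re
from collections import Counter

# Hypothetical policy snippets; a real deployment would index thousands of documents.
POLICY_DOCS = {
    "wire-transfers": "International wire transfers above the daily limit require manager approval.",
    "card-disputes": "Customers may dispute a card transaction within 60 days of the statement date.",
    "account-closure": "Closing a joint account requires consent from all account holders.",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank_documents(query, docs, top_k=2):
    """Return up to top_k document IDs, best match first, scored by TF-IDF overlap."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    doc_freq = Counter()
    for tokens in tokenized.values():
        doc_freq.update(set(tokens))

    query_terms = set(tokenize(query))
    scores = {}
    for doc_id, tokens in tokenized.items():
        term_freq = Counter(tokens)
        score = 0.0
        for term in query_terms:
            if term in term_freq:
                # Smoothed inverse document frequency: rarer terms weigh more.
                idf = math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0
                score += term_freq[term] * idf
        scores[doc_id] = score

    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    # Drop documents that share no terms with the query.
    return [doc_id for doc_id, score in ranked[:top_k] if score > 0]
```

In this sketch, a question such as "How do I dispute a card transaction?" ranks the hypothetical `card-disputes` passage first. The retrieved passages would then be handed to the model along with the agent's question, so that its guidance can point back to specific policy text.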

Banks can implement generative AI to better serve customers across the value chain.

Deliver more thoughtful investment recommendations, faster

One of banking's most time-consuming tasks is research that draws on qualitative and quantitative information. Now, generative AI can do most of the heavy lifting.

With simple instructions, gen AI can find, digest, and combine real-time information on actual or potential investments from internal and external sources. It can then generate reports that only need a quick check before forming the basis of, say, a client presentation or a morning market commentary.

Such solutions also enable investment advisors to quickly make more thoughtful buy / sell / hold recommendations to clients in those critical minutes before the opening bell rings. And better investment recommendations mean happier customers.

Reduce the 'time to yes' for commercial loans

In commercial lending, generative AI can help relationship managers and credit officers approve a business-to-business loan approximately 60% faster by ingesting the relevant financial documents and writing a credit memo in seconds. The faster commercial clients can draw down on their loans, the more satisfied they are, leading to more business for the bank.

Accelerate financial crime investigations

While banks already use AI to help them spot financial crime, generative AI can help analysts complete a task in the investigation lifecycle that typically takes up to 30% of their time: narrative writing. For example, Apex Fintech Solutions is using riskCanvas™, Genpact's financial crime software suite with integrated Amazon Bedrock generative AI capabilities, to produce suspicious activity reports at the click of a button.

Five considerations for generative AI

Before banks get started with generative AI, they should consider five things: understanding and prioritizing where it will provide the most value; deploying cloud-based technical architecture that supports AI at scale; embedding analytics across workflows; ensuring strong data management with governance for responsible AI; and developing a scalable operating model that includes programs to nurture new skills and roles for employees.

These fundamentals will help banks manage the headline risks from generative AI: financial crime, regulation, and the technology itself.

Managing the risks from generative AI

Fight the financial crime fire with fire

Who's more excited than banks about generative AI? Criminals. Fraudsters are using it to easily and rapidly create thousands of convincing and fully documented fake personas and IDs to beat banks' know-your-customer and anti-money laundering checks. This means criminals can open accounts, gain access to lines of credit, and more.

To stay ahead, banks must invest in new ways of validating identities — such as bio-verification, voice identification, and location technology — across every customer touchpoint.

Keep pace with regulation

As regulators come to grips with gen AI's potential uses and implications, different regulatory regimes are emerging around three main issues: transparency, fairness, and consumer protection. But other issues, such as intellectual property, are emerging too. As usual, banks will have to keep pace with an ever-evolving regulatory picture.

Understand the technology's inherent risks

Gen AI itself poses risks. It neither seeks the truth, nor is guaranteed to deliver it. That's why banks won't put it directly in front of customers, for now. While greater transparency can be built into it, the origins of its responses are otherwise a black box without reasoning or references to original sources, and tracing its decisions is hard. It can hallucinate, providing confident but false responses that show no understanding of context. And it must be trained using enough recent, relevant, and unbiased data for its output to be trustworthy.

Banks may solve some of these issues by training their own industry-specific models. Indeed, some such models are already beginning to emerge. In any event, banks should establish and follow a responsible gen AI framework that applies guardrails to mitigate risks, such as those arising from biases in pre-trained models.
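As one illustration of what a guardrail might look like in practice, the sketch below checks a model-generated draft before it reaches an agent. It flags drafts that cite no source, cite a source the bank does not recognize, or contain a blocked phrase, and leaves the final decision to a human reviewer. Everything here is an assumption made for the example: the `[source: ...]` citation format, the `review_draft` function, and the blocked phrase are hypothetical and do not come from any specific framework or product.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Review:
    """Outcome of a guardrail check on one generated draft."""
    approved: bool
    reasons: list = field(default_factory=list)


# Assumed citation convention for this sketch: "[source: document-id]".
CITATION_PATTERN = re.compile(r"\[source:\s*([\w-]+)\]")


def review_draft(draft, known_sources, blocked_terms=("guaranteed return",)):
    """Flag drafts that lack traceable sources or contain blocked phrases."""
    reasons = []

    cited = CITATION_PATTERN.findall(draft)
    if not cited:
        reasons.append("no source cited")

    unknown = [source for source in cited if source not in known_sources]
    if unknown:
        reasons.append("unknown source: " + ", ".join(unknown))

    lowered = draft.lower()
    for term in blocked_terms:
        if term in lowered:
            reasons.append("blocked phrase: " + term)

    # A draft passes only if no check raised a concern; otherwise it goes to a human.
    return Review(approved=not reasons, reasons=reasons)
```

A draft that cites a recognized policy document passes, while one that makes an uncited promise of a guaranteed return is held back with both reasons recorded. Checks like these address only traceability and wording. They do not detect bias or verify that the cited source actually supports the claim, so human oversight remains part of the loop.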

This article first appeared in Global Banking and Finance Review. It was authored by BK Kalra, global business leader, financial services, consumer, and healthcare, Genpact, and Moutusi Sau, vice president and team manager, financial services, Gartner.