Point of view

Creativity and constraints: A framework for responsible generative AI

Learn how enterprise leaders navigate rapid developments in generative AI responsibly

Generative AI is transforming how companies operate. Like all technologies, though, it is not infallible. For enterprise leaders, demonstrating that AI practices prioritize transparency, fairness, and accountability is critical – especially as the technology becomes mainstream.

But developing a strategy for responsible AI is not straightforward. You must navigate a maze of ethics, copyright, and intellectual property complications. With a responsible generative AI framework in place, companies can maintain their reputations by:

- Protecting IP, data security, and gen-AI models

- Understanding how responsible AI differs by region and industry

- Enabling responsible decision-making

Already, we're seeing the consequences of getting responsible AI wrong. Whether it's Samsung workers accidentally leaking sensitive data to ChatGPT or Zoom facing public backlash for incorporating user conversations into training data for large language models (LLMs), the stakes have never been higher.

Read on to learn how to develop and scale responsible AI solutions.

Why enterprises need a responsible generative AI framework

A recent study by MIT Sloan Management Review and Boston Consulting Group found that 63% of AI practitioners are unprepared to address the risks of new generative AI tools. While the fundamental tenets of artificial intelligence development still apply, generative AI creates new challenges. Because these tools rely heavily on visual and textual data, mostly scraped from the internet, there are many ethical implications and potential biases to overcome.

Here are some areas of concern:

- LLM opaqueness and data accessibility: AI developers usually access LLMs – which power today's generative AI tools – through application programming interfaces (APIs). Most models are proprietary, so accessing the training data is nearly impossible. As a result, when a company uses a third-party LLM, software developers often can't check for ethical issues, such as potential biases

- Blurred roles in training data: Pretrained LLMs are highly user-friendly and accessible to those without a computer science background. For instance, with a few YouTube tutorials, employees can build virtual assistants. This means adoption can skyrocket while also blurring the lines between developers and end-users. Consequently, the scope of responsible AI programs must extend far beyond a small group of IT specialists

- Misplaced trust in AI's capabilities: Because LLMs are adept at producing human-like language and sounding confident in their responses, end-users can mistake their outputs for fact and trust them too easily

- Lack of governance: Organizational pressure to rush from AI pilots to production often prevents AI practitioners from establishing robust governance frameworks from the start

With myriad other challenges – like intellectual property rights, security, and privacy – enterprise leaders across industries are taking a step back to reassess their responsible AI strategies.

A new approach to sustainable innovation

Most organizations struggle to find enough talent for generative AI pilot projects. This means spending time on ethical risks – while necessary – often falls by the wayside.

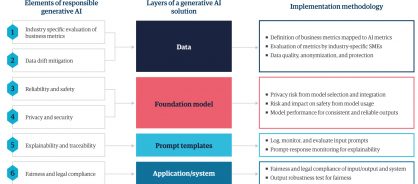

To guide our own AI initiatives, we have built a responsible generative AI framework (figure). It covers the four layers of every gen-AI solution: data, foundation model, prompt templates, and the application or system.

Figure: Genpact's responsible generative AI governance

The framework also covers the six elements needed for responsible generative AI. Let's look at them in more detail:

- Industry-specific evaluation of business metrics: The alignment between business and technology strategy is crucial to the success of any generative AI project. Our framework seamlessly marries business metrics with AI performance metrics, which allows stakeholders to implement solutions with confidence, knowing they can measure and report on success

- Data drift mitigation: To mitigate data drift – the change over time in the statistical properties of data – we have industry subject-matter experts set metrics for data quality, anonymization, and overall performance

- Reliability and safety: Widespread gen-AI hallucinations are a nightmare for enterprises, so we provide guidance on how to select a model and fine-tune it to gain consistent and reliable outputs

- Privacy and security: We use privacy-by-default frameworks that allow organizations to conduct due diligence and enhance transparency. Our approach protects AI systems, applications, and users, expediting the pace and scale of software development

- Explainability and traceability: We introduce auditing mechanisms to validate and monitor gen-AI prompt engineering throughout the user journey. This means outputs are easy to explain and trace

- Fairness and legal compliance: The guardrails in our framework mitigate the risks from biases in pretrained models. For example, our output robustness test for fairness makes sure AI-generated responses meet a range of compliance measures, whether internal standards or new government guidelines such as the AI Act introduced by the European Union
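To make the data drift element above more concrete, here is a minimal sketch of one common drift metric, the population stability index (PSI). It is an illustration only, not the metric used in the framework: the function name, the ten-bin default, and the 0.25 alert level (a widely quoted rule of thumb) are all assumptions.

```python
import math
from typing import List, Sequence


def population_stability_index(
    baseline: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def bin_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    expected = bin_shares(baseline)
    actual = bin_shares(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical data: a feature at training time vs. the same feature in production
training_sample = [float(v) for v in range(100)]
production_sample = [v + 50.0 for v in training_sample]

psi = population_stability_index(training_sample, production_sample)
if psi > 0.25:  # assumed alert level; subject-matter experts would set the real one
    print("Significant drift: review data quality before trusting outputs")
```

In practice, the subject-matter experts mentioned above would choose which features to monitor and what level of drift should trigger a review.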

Case study

Jump-starting innovation in lending with generative AI

Preparing credit packs and annual credit reviews is effort-intensive, time-consuming, and costly. Not only must bank analysts compile and analyze massive datasets, but they must also adhere to a host of regulatory guidelines.

To tackle this challenge, our AI specialists developed a generative-AI-enabled loan origination and monitoring tool that aggregates vast volumes of documents. Using large language models, our solution facilitates data extraction and text segmentation for contextualized data analysis and summarization.

Building on this, our team prioritized explainability. With the solution processing so much sensitive data, we needed a way to pinpoint where the information came from, down to specific sections of documents. So we developed a method for attribution of information, enhancing accuracy and confidence.
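As a rough illustration of what section-level attribution can look like, the sketch below links a generated statement to the source passage it overlaps with most. This is not the method used in the solution described here: the `Passage` type, the `attribute` function, and the simple word-overlap scoring are hypothetical stand-ins, and a production system would likely use a stronger matching technique.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass(frozen=True)
class Passage:
    document: str  # hypothetical source file name
    section: str   # hypothetical section heading within that file
    text: str


def _tokens(text: str) -> Set[str]:
    # Lowercase, strip common punctuation, and ignore very short words
    cleaned = (w.strip(".,;:()%$").lower() for w in text.split())
    return {w for w in cleaned if len(w) > 3}


def attribute(statement: str, passages: List[Passage]) -> Optional[Passage]:
    """Return the passage sharing the most words with the statement, or None."""
    words = _tokens(statement)
    best, best_overlap = None, 0
    for passage in passages:
        overlap = len(words & _tokens(passage.text))
        if overlap > best_overlap:
            best, best_overlap = passage, overlap
    return best


# Hypothetical documents and a hypothetical generated statement
sources = [
    Passage("annual_report.pdf", "Liquidity",
            "Cash reserves increased to cover short-term debt obligations."),
    Passage("annual_report.pdf", "Governance",
            "The board appointed two independent directors."),
]
match = attribute("The borrower's cash reserves cover its short-term debt.", sources)
if match is not None:
    print(f"Source: {match.document}, section: {match.section}")
```

Returning `None` when nothing overlaps matters as much as finding a match: a statement with no traceable source is a candidate for human review.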

In addition, we embedded a responsible AI framework for gen-AI prompt-response monitoring using 25 accelerators, such as an AI governance matrix, machine-learning reliability inspector, data privacy assessor, and more.

With a robust process in place, bank analysts and other stakeholders can now make informed decisions confidently and efficiently.

Creating a responsible culture

A responsible generative AI strategy needs more than a framework. Enterprise leaders must make responsible AI part of their companies' cultures.

Here's how to succeed:

- Build awareness of responsible generative AI: If your framework remains a file on someone's computer and is not shared across the enterprise to become a part of your culture, it won't do any good. Part of your framework needs to be a plan for communicating and enforcing responsible AI throughout the enterprise

- Prepare in advance: Before deploying a gen-AI solution, identify exactly which processes it will impact. Then, create actions for mitigating legal, security, or ethical concerns that could arise throughout these workstreams

- Rally around common benefits: Adopting generative AI requires unique transparency considerations inside and outside your enterprise. Focus on the benefits that unite your stakeholders and be transparent and authentic in your communication

- Prioritize explainability: If stakeholders can't see how a generative AI tool arrived at its output, you'll struggle to gain their trust. Incorporate the resources, libraries, and frameworks that demonstrate how a generative AI program reached its result

- Embed reliability metrics: Formulate confidence scores for the outputs of generative AI tools and pass them through human evaluation. With humans in the loop, you can incorporate people's expertise, fine-tune algorithms, and improve accuracy. This approach also fosters trust in your AI solutions
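The reliability-metrics step above could be wired up along these lines. This is a minimal sketch under stated assumptions: each output already carries a confidence score between 0 and 1 (how that score is computed is out of scope), and the `ScoredOutput` type, the `route_for_review` function, and the 0.8 threshold are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScoredOutput:
    prompt: str
    response: str
    confidence: float  # assumed to lie in [0, 1]


def route_for_review(
    outputs: List[ScoredOutput], threshold: float = 0.8
) -> Tuple[List[ScoredOutput], List[ScoredOutput]]:
    """Split outputs into (auto_approved, needs_human_review) by confidence."""
    approved = [o for o in outputs if o.confidence >= threshold]
    needs_review = [o for o in outputs if o.confidence < threshold]
    return approved, needs_review


# Hypothetical outputs from a generative AI tool
batch = [
    ScoredOutput("Summarize clause 4", "Clause 4 limits liability to fees paid.", 0.93),
    ScoredOutput("Summarize clause 9", "Clause 9 may allow early termination.", 0.55),
]
approved, needs_review = route_for_review(batch)
print(f"{len(approved)} auto-approved, {len(needs_review)} sent to human reviewers")
```

The reviewers' corrections on the low-confidence items are what feed the fine-tuning and accuracy improvements described above, so the threshold is worth revisiting as the tool matures.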

The next evolution of AI

Rapid developments in generative AI have caught many enterprise leaders off guard. And the pace of innovation shows no signs of slowing. As new tools come to market, ethical concerns will continue to rise.

But don't let these concerns hold you back. Remember, with every challenge comes opportunity.

Lean on the technology partners with the expertise to help you use generative AI responsibly on your journey toward a more profitable, sustainable, and resilient future.